In quantitative trading, the variety of possible systems is practically endless, but there are only two general approaches to constructing them: the analytical and the statistical. Each handles the optimization and selection of strategies in a very different way, which even affects how the results of a backtest are interpreted and evaluated.

APPROACHES TO SYSTEM DESIGN

The analytical approach, also known as the “scientific approach” or “quant approach”, investigates the processes underlying market dynamics. It analyzes in depth the market’s large-scale structure and microstructure, trying to find inefficiencies or sources of alpha. For example, it conducts studies on:

- The sensitivity to news and the speed with which markets absorb their impact.

- The specific causes of some seasonal phenomena observed in commodity markets.

- Interest rates and their impact on different asset classes.

- The way high-frequency trading alters the microstructure of a market.

- The effect of the money supply on expansionary and contractionary phases.

- The “size factor”, “momentum” and the dynamics of mean reversion.

As a result of these often interdisciplinary and highly complex studies, researchers formulate hypotheses and construct models that they will later try to validate statistically. In this approach, the backtest aims at the historical analysis of the detected inefficiencies and their potential to generate alpha in a consistent manner.

The next step is the construction of the strategy itself: operating rules are formulated that reinforce or filter this inefficiency. Some will be parameterizable and others will not, so the risk of over-optimization is not completely eliminated. However, the big difference from conventional system construction is that researchers know in advance that there is an exploitable inefficiency and, more importantly, they have an explanatory model that links it to processes that affect markets and modulate their behaviour.

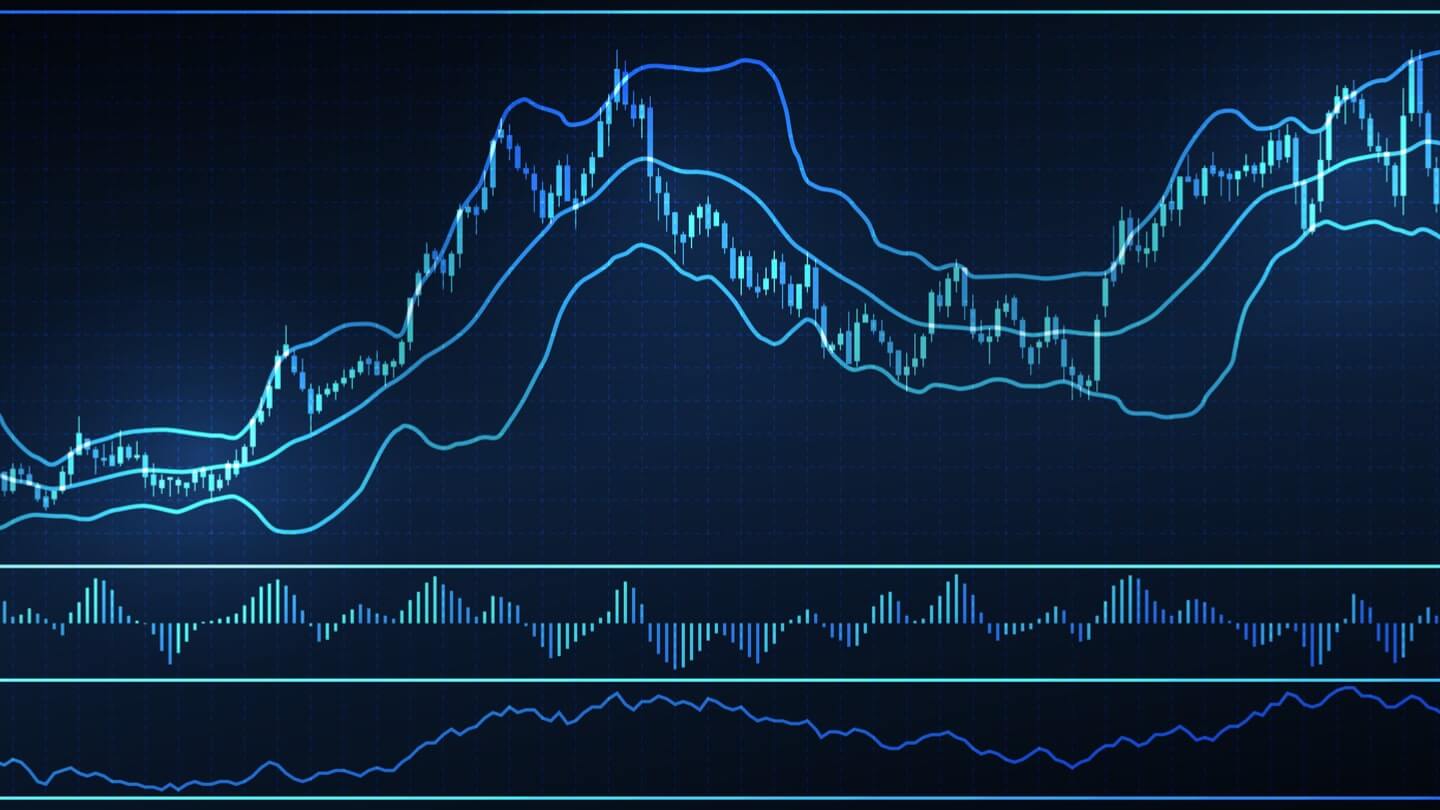

The statistical approach does not pursue knowledge of market dynamics, nor does it make prior assumptions about the processes that lead to inefficiencies. It searches historical data directly for patterns, cycles, correlations, trends, reversion movements, etc. that can be captured by system rules to generate alpha. The rules can be crafted manually, by combining the resources of technical analysis on the basis of previous experience, or with advanced data mining and machine learning (ML) techniques.

Here, backtesting occupies a central place, since it allows us to distill the strategy in an iterative process of trial and error in which a vast number of rules and alternative systems are tested. Optimization arises from the progressive adjustment of rules and parameters to the historical data series, and it is measured by the increase in the value of the ratio or set of criteria (Sharpe, Profit Factor, SQN, Sortino, Omega, Min. DD, R², etc.) used as the fitness function.
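As a rough illustration of the kind of criteria used as an optimization target, the sketch below computes three of the measures listed above from a series of returns, a list of trade results and an equity curve. The function names, the zero risk-free rate and the 252-period annualization are assumptions made for this example, not the conventions of any particular platform.

```python
import math
from statistics import mean, stdev


def sharpe_ratio(returns, periods_per_year=252):
    """Annualized Sharpe ratio, assuming a zero risk-free rate."""
    sd = stdev(returns)  # sample standard deviation, needs >= 2 returns
    if sd == 0:
        return float("nan")
    return mean(returns) / sd * math.sqrt(periods_per_year)


def profit_factor(trade_pnls):
    """Gross profit divided by gross loss over a list of trade results."""
    gross_profit = sum(p for p in trade_pnls if p > 0)
    gross_loss = -sum(p for p in trade_pnls if p < 0)
    return gross_profit / gross_loss if gross_loss > 0 else float("inf")


def max_drawdown(equity):
    """Largest peak-to-trough decline of an equity curve, as a fraction."""
    peak = equity[0]
    worst = 0.0
    for value in equity:
        peak = max(peak, value)
        worst = max(worst, (peak - value) / peak)
    return worst
```

An optimizer would call one of these (or a weighted combination) on every candidate configuration and keep the configurations with the best score.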

The big problem with this way of looking at things is that we will never be able to know with certainty whether the results we obtain have a real basis in inefficiencies present in price formation or arise by chance, as a result of over-fitting rules and parameter values to historical data. Moreover, ignoring the processes involved in generating alpha turns the system into a kind of black box, which has the psychological effect of making traders quickly lose confidence in their strategy as soon as live trading results turn adverse.

Building systems by data mining need not lead to over-optimised or lower-quality strategies than those of the analytical approach. In our opinion, the keys to this procedure are:

- Start from a logic or general architecture consistent with the type of movements you want to capture. This keeps us from improvising and testing dozens of filters and indicators almost at random.

- Rigorously delimit the in-sample (IS) and out-of-sample (OS) regions of the history, ensuring at each stage of the design and evaluation processes that there is no “data leak” or contamination of the OS region.

- Do not give in to the temptation to re-optimize the parameters or modify the rules again and again when we get poor results in the OS.

- Keep the strategy as simple as possible. Overly complex systems are true over-optimizing machines. The reason is that they consume too many degrees of freedom, so to avoid over-fitting to the price series we would need a huge history that we almost certainly do not have.
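The IS/OS delimitation described above can be sketched in a few lines. This is a minimal example under stated assumptions: the helper name `split_is_os`, the default 70/30 proportion and the optional `gap` of discarded bars between the two regions are illustrative choices, not a prescribed protocol.

```python
def split_is_os(series, is_fraction=0.7, gap=0):
    """Split a time-ordered series into IS and OS regions without shuffling.

    `gap` bars are dropped between the regions so that indicators with a
    lookback window computed at the start of the OS do not reach into the IS.
    """
    if not 0 < is_fraction < 1:
        raise ValueError("is_fraction must be between 0 and 1")
    if gap < 0:
        raise ValueError("gap must be non-negative")
    cut = int(len(series) * is_fraction)
    in_sample = series[:cut]            # oldest data: design and optimization
    out_of_sample = series[cut + gap:]  # newest data: evaluation only
    return in_sample, out_of_sample
```

The split is strictly chronological: the OS always lies after the IS, and once the OS has been used to evaluate a strategy it should not feed back into the design.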

Even taking all these precautions, there remains a risk of structural over-optimisation, induced by the way such strategies are constructed, that is virtually impossible to eliminate. It takes different forms depending on whether the strategy is built manually or automatically:

In the case of manual construction, even if the IS and OS regions are strictly delimited, the developer has inside information about the type of logic and operating rules that are working best now and ends up using them in new developments. Markets may not have memory, but the trader does and cannot become amnesiac when designing a strategy. Perhaps you will be honest and try your best not to pollute the OS with preconceived ideas and previous experiences. But believe me, it’s practically impossible.

In the case of ML, the problem is the extremely high number of degrees of freedom with which the machine works. A typical genetic programming platform, such as StrategyQuant or Adaptrade Builder, has a huge number of indicators, logical and mathematical rules, and entry and exit subsystems. Combining all these rules, many of which include optimizable parameters, the genetic algorithm builds and evaluates thousands of alternative strategies on the same market. The fact that two or more regions of the history (training, validation, and evaluation) are perfectly delimited does not prevent many of the top-ranking strategies from performing well in the OS by chance, rather than demonstrating some capacity for generalization to future scenarios.
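A small simulation can make this selection effect tangible. The sketch below is a toy model, not a reproduction of any platform: each "strategy" is pure Gaussian noise with zero true edge, and we simply keep the one with the best IS Sharpe ratio. The function names, the noise model and all default sizes are assumptions chosen for illustration.

```python
import math
import random


def sharpe(returns):
    """Per-period Sharpe ratio (zero risk-free rate, sample std)."""
    n = len(returns)
    m = sum(returns) / n
    var = sum((r - m) ** 2 for r in returns) / (n - 1)
    return m / math.sqrt(var) if var > 0 else 0.0


def best_of_random_trials(n_trials=1000, n_is=250, n_os=250, seed=42):
    """Generate zero-edge strategies and select the best one on the IS."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_trials):
        # Returns are independent noise in both regions: no real alpha.
        is_ret = [rng.gauss(0.0, 0.01) for _ in range(n_is)]
        os_ret = [rng.gauss(0.0, 0.01) for _ in range(n_os)]
        results.append((sharpe(is_ret), sharpe(os_ret)))
    # The "winner" is chosen purely on its IS score.
    best_is, best_os = max(results, key=lambda r: r[0])
    return best_is, best_os, results


if __name__ == "__main__":
    best_is, best_os, results = best_of_random_trials()
    n_good_os = sum(1 for _, os_score in results if os_score > 0)
    print(f"best IS Sharpe: {best_is:.3f}, same strategy in OS: {best_os:.3f}")
    print(f"zero-edge strategies with positive OS Sharpe: {n_good_os}/{len(results)}")
```

Because the returns are symmetric noise, roughly half of these worthless strategies should also show a positive OS result, which is the sense in which a clean IS/OS separation alone does not rule out chance.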

OPTIMISATION AND SELECTION OF STRATEGIES

If we start from a simple dictionary definition, optimization in mathematics and computing is a “method for calculating the values of the variables involved in a system or process so that the result is the best possible”. In systematic trading, an optimization problem consists of selecting a set of operating rules and adjusting their variables so that, when applied to a historical series of quotes, they maximize (or minimize) a certain objective function.
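To make the definition concrete, here is a minimal sketch of an exhaustive (grid) search over the two parameters of a moving-average crossover rule. The rule, the net P&L objective and the names `sma`, `crossover_pnl` and `grid_search` are hypothetical choices for this example; real platforms use richer objectives and search methods.

```python
from itertools import product


def sma(prices, n, i):
    """Simple moving average of the n prices ending at index i (inclusive)."""
    return sum(prices[i - n + 1 : i + 1]) / n


def crossover_pnl(prices, fast, slow):
    """Net P&L of holding one unit long whenever SMA(fast) > SMA(slow)."""
    pnl = 0.0
    for i in range(slow - 1, len(prices) - 1):
        if sma(prices, fast, i) > sma(prices, slow, i):
            # Position is decided with data up to bar i and earns the
            # price change from bar i to bar i + 1 (no look-ahead).
            pnl += prices[i + 1] - prices[i]
    return pnl


def grid_search(prices, fast_values, slow_values, objective=crossover_pnl):
    """Return the (fast, slow) pair that maximizes the objective function."""
    candidates = [(f, s) for f, s in product(fast_values, slow_values) if f < s]
    return max(candidates, key=lambda params: objective(prices, *params))
```

Run on the IS region only, `grid_search` returns the best-fitting pair for that data; whether that pair reflects a real inefficiency is exactly what the OS evaluation has to decide.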

Depending on the approach, the concept of optimization takes on different meanings. In the analytical approach, optimization is used to:

- Adjust the parameters of a previously built model to the objectives and characteristics of a standard investor.

- Calibrate the sensitivity of filters that maximize detected inefficiency or minimize noise.

- Fit the operating rules to the time interval in which the inefficiency is observed.

- Test the effectiveness of rules in different market regimes.

In general, this is a “soft optimization”, since the alpha-generating process is known before the system is formulated. In contrast, in the approach based on data mining, optimization is the engine of strategy building. Here it is used to:

- Select the entry and exit rules.

- Determine the set of indicators and filters that best fit the data set.

- Select the robust zones or parametric ranges of the variables.

- Set the optimal value of stop losses and profit targets.

- Select the best operating schedule.

- Adjust the money management algorithm to an optimal level in terms of risk/reward (R/R).

- Calculate the activation weights in a neural network.

In the ML world, the historical data set used as IS is called the training period (training set), and the out-of-sample or OS data is called the testing or evaluation period (testing set). Configurations that are subject to evaluation are called “trials”, and the number of possible trials depends on the complexity of the system to be evaluated. More rules and parameters mean more configurations, fewer degrees of freedom, and a greater ability to fit the IS series. In other words: the more we adjust and the better the system works on the IS side, the lower its capacity to generalize and the worse its performance on the OS side.
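How quickly the number of trials grows with complexity can be shown with simple counting. In this sketch the parameter names and ranges are invented for illustration, and `count_trials` assumes an exhaustive grid in which every combination of values is one trial.

```python
import math


def count_trials(param_grid):
    """Number of configurations in an exhaustive grid over all parameters."""
    return math.prod(len(values) for values in param_grid.values())


# A simple system: two parameters with 10 candidate values each.
simple_system = {
    "fast_period": range(5, 15),
    "slow_period": range(20, 30),
}

# The same system with three extra filters, each with 10 candidate values.
complex_system = {
    **simple_system,
    "volatility_filter": range(10),
    "stop_loss_ticks": range(10, 110, 10),
    "profit_target_ticks": range(20, 220, 20),
}

simple_trials = count_trials(simple_system)    # 10 * 10
complex_trials = count_trials(complex_system)  # 10 ** 5
```

Three additional parameters multiply the search space by a thousand, while the length of the IS series stays the same.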

In a realistic backtest, the average results obtained should be in line with those of live trading. But this hardly ever happens, because during the construction and evaluation process many developers make one or more of the following five types of error:

ERROR I: Naïve evaluation. The same data is used to build and evaluate the strategy. The whole series is IS and the results obtained are taken as good, perhaps with some cosmetic corrections. It is surprising to find this error even in academic papers published in prestigious outlets. In our opinion, any backtest that does not explain in detail how it was obtained is suspect of this error. And here the typical warning that the figures are “hypothetical data” is not good enough: they are not hypothetical, they are unreal! And the whole industry knows it, except the unsuspecting investor.

ERROR II: Data contamination. Due to poor design of the evaluation protocol, data that have already been used in the IS are sometimes reused in the OS. This usually happens for two reasons:

- The IS-OS sections have not been correctly delimited in the evaluation phase, for example when performing a walk-forward or cross-validation.

- Information from the OS has been used to re-optimise or, worse, to change the rules of the strategy when the results are considered too poor.
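One way to avoid the first of these problems in a walk-forward is to generate the IS and OS index ranges programmatically instead of delimiting them by hand. The following is a minimal sketch of a rolling scheme; the function name and the choice to advance the window by exactly one OS block are assumptions made for the example.

```python
def walk_forward_windows(n_bars, is_size, os_size):
    """Yield (is_range, os_range) index pairs for a rolling walk-forward.

    Each OS block starts exactly where its IS block ends, and the window
    then advances by os_size, so OS blocks never overlap one another.
    """
    if is_size <= 0 or os_size <= 0:
        raise ValueError("is_size and os_size must be positive")
    start = 0
    while start + is_size + os_size <= n_bars:
        is_range = range(start, start + is_size)
        os_range = range(start + is_size, start + is_size + os_size)
        yield is_range, os_range
        start += os_size
```

Within each step the parameters are fitted on `is_range` only and evaluated on `os_range` only. Bars that were OS in one step may legitimately become IS in a later step, but never the other way round within the same step.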

ERROR III: History too short. There is not enough history to train the strategy on the most common regime changes in the markets. In this case, what we will be building are “short-sighted” systems, with very limited or no capacity to adapt to changes.

ERROR IV: Degrees of freedom. The strategy is so complex that it consumes too many degrees of freedom (DoF). Such a problem can be solved by simplifying the rules or increasing the size of the IS.

In a simple system, it is easy to calculate the DoF and the minimum IS needed. But what about genetic programming platforms? How do we calculate the DoF consumed by an iterative process that combines hundreds of rules and logical operators to generate and evaluate thousands of alternative strategies per minute in the IS?

ERROR V: Low statistical significance. This usually happens when we evaluate strategies that generate a very small number of trades per period analyzed. With very few trades, statistical reliability decreases and the risk of over-optimization skyrockets.

We can approximate the minimum number of trades needed for a standard error that is acceptable to us, but it is impossible to establish a general criterion, among other things because trades are not evenly distributed across periods. For example, when we work with volatility filters, we find years in which a high number of trades are generated and others in which there is hardly any activity. Does this mean that we should reject the system because some OS slices, obtained from a walk-forward of n periods, have low statistical reliability? Obviously not. The system works according to its logic. So what we could do is revise the evaluation model to compensate for this contingency.

Another example would be the typical long-term system applied on weekly or monthly time frames that makes at most ten trades per year. In these cases the strategy cannot be analysed asset by asset, and the only possible analysis is at the portfolio level, pooling the trades of the different assets, or building longer synthetic series for a single asset.
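The approximation of the minimum sample size mentioned under Error V can be sketched with the usual standard error of the mean, SE = σ / √n. This assumes that trade results are independent and identically distributed, which real trades only approximate; the function names and the figures in the comments are illustrative.

```python
import math


def standard_error(trade_std, n_trades):
    """Standard error of the average trade, assuming i.i.d. trade results."""
    return trade_std / math.sqrt(n_trades)


def min_trades(trade_std, target_se):
    """Smallest number of trades whose standard error is <= target_se."""
    if trade_std < 0 or target_se <= 0:
        raise ValueError("trade_std must be >= 0 and target_se must be > 0")
    return math.ceil((trade_std / target_se) ** 2)


# Illustrative figures: trades with a standard deviation of 200 (in account
# currency) and a tolerated standard error of 20 on the average trade.
needed = min_trades(200.0, 20.0)  # (200 / 20) ** 2 = 100 trades
```

Under the same assumptions, a system that makes ten trades per year on one asset would need ten years to reach that figure, while pooling ten comparable assets would reach it in about one year, which is the motivation for the portfolio-level analysis described above.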

A profitable and fully functional strategy does not need to meet all these criteria; they are a list of ideals. However, the more of them it meets, the more reliable and robust the system will be.

On the other hand, this issue does not look the same from the point of view of the developer and that of the end user of the system. As developers, we will come close to meeting these criteria provided we have a well-defined protocol that covers all stages of design and evaluation. And, of course, if we also want to meet the first requirement, we will have to spend a lot of time on basic market research.

When the strategy is designed for third parties, these requirements are only met under the principle of maximum transparency: the developer must provide not only the code but all available information about the process of creating the strategy. In practice, this is not the case for the vast majority of systems offered to retail investors on the Internet by sale or subscription. That’s why we’re so skeptical of “black box” or similar strategies. We don’t care if someone puts wonderful curves and fabulous statistics on their website. That’s like the wrapping on a box of cookies in a supermarket: if we can’t taste the product, we can’t say anything about it.