It’s cloudy. Every minute, the area covered by clouds doubles, and in 100 minutes the sky will be completely covered. How many minutes will it take the clouds to cover half the sky? 50 minutes is the answer usually heard for this version of the riddle. However, if the clouds double every minute and cover the whole sky at minute 100, then a minute earlier they covered just half of it. So the correct answer is 99 minutes.
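The reasoning behind the riddle fits in a few lines. This minimal sketch assumes, as the riddle does, that the covered fraction doubles every minute and reaches the whole sky at minute 100; the function name `coverage` is purely illustrative.

```python
def coverage(minute: int, full_at: int = 100) -> float:
    """Fraction of the sky covered, assuming it doubles every minute
    and reaches 1.0 (the whole sky) at minute `full_at`."""
    return 2.0 ** (minute - full_at)

print(coverage(100))  # whole sky
print(coverage(99))   # one minute earlier: half the sky
print(coverage(50))   # the intuitive answer: a vanishingly small patch
```

Working backwards from the full sky, each step back halves the coverage, so half the sky is exactly one step (one minute) before the end, and minute 50 corresponds to 2^-50 of the sky.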

Fast and Slow Thinking

It wasn’t a complicated riddle, but to solve it you had to think slowly. In his excellent book Thinking, Fast and Slow, D. Kahneman, the father of behavioral finance, described the two ways we process information:

A fast, emotional, and intuitive one, which stumbles on problems that require evaluation and logic. Technically known as System 1; colloquially, Homer.

A slow and rational one, which requires more energy. Known as System 2, or Mr. Spock to his friends.

We are Homer by default, and that is enough for day-to-day life. With Spock in charge, simple tasks like buying groceries or choosing the color of a tie would take hours.

System 1 is efficient and consumes less energy. In return, it takes a series of shortcuts that lead us into mental traps. For example, how many times do you think you could fold a sheet of paper in half? It seems a simple task, but I bet you couldn’t do it more than 12 times. Try it.

In fact, it was thought impossible to fold a sheet more than 8 times until, in January 2002, B. Gallivan explained how to reach 12 in her book How to Fold Paper in Half Twelve Times. You read that right: a book.

Incredible, isn’t it? If mathematics didn’t assure you that it is true, you wouldn’t believe it. It’s not something you can picture: you have to take it on faith in science.

We Are Fooled

Imagine we found a way to fold it more than 12 times: say, 20. Which would be thicker, a pipeline pipe or our sheet?

A sheet of paper is about 0.1 mm thick. Fold it in half and it is 0.2 mm; fold it again and it is 0.4 mm. By the seventh fold it is about as thick as a notebook. At around 23 folds it is close to 1 km thick. At 42 folds our sheet would reach the Moon, and at 51 it would be past the Sun. After 86 folds it would be the size of the Milky Way, and after 103 the size of the universe. That’s math.
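The doubling above can be checked with a short sketch. It assumes the article’s 0.1 mm sheet, a perfect doubling on every fold, and round reference distances chosen for illustration (mean Earth–Moon distance of about 384,400 km, one astronomical unit for the Sun, and roughly 93 billion light-years for the diameter of the observable universe); the exact fold counts can shift by one if other distance figures are used.

```python
SHEET_MM = 0.1  # assumed thickness of one sheet, in millimetres

def thickness_m(folds: int) -> float:
    """Thickness in metres after `folds` halvings: t0 * 2**folds."""
    return SHEET_MM / 1000 * 2 ** folds

def folds_to_exceed(distance_m: float) -> int:
    """Smallest number of folds whose thickness exceeds distance_m."""
    n = 0
    while thickness_m(n) <= distance_m:
        n += 1
    return n

# Approximate reference distances (assumed round values), in metres.
LIGHT_YEAR_M = 9.4607e15
TARGETS = {
    "Moon (mean distance)": 384_400e3,
    "Sun (1 astronomical unit)": 149_597_870_700.0,
    "observable universe (diameter)": 93e9 * LIGHT_YEAR_M,
}

print(f"7 folds:  {thickness_m(7) * 1000:.1f} mm")  # notebook thickness
print(f"20 folds: {thickness_m(20):.1f} m")         # the pipeline question
for name, dist in TARGETS.items():
    print(f"{name}: {folds_to_exceed(dist)} folds")
```

Twenty folds already give a stack of roughly 105 m, far thicker than any pipeline pipe. The loop in `folds_to_exceed` is deliberately naive; a logarithm would give the same answer, but the loop keeps the doubling visible.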

We can’t picture it. Mathematics says it’s true, but the mind resists it. Only with experience, knowledge, and the right tools will we know when System 2 needs to step in for us to reach sound conclusions.

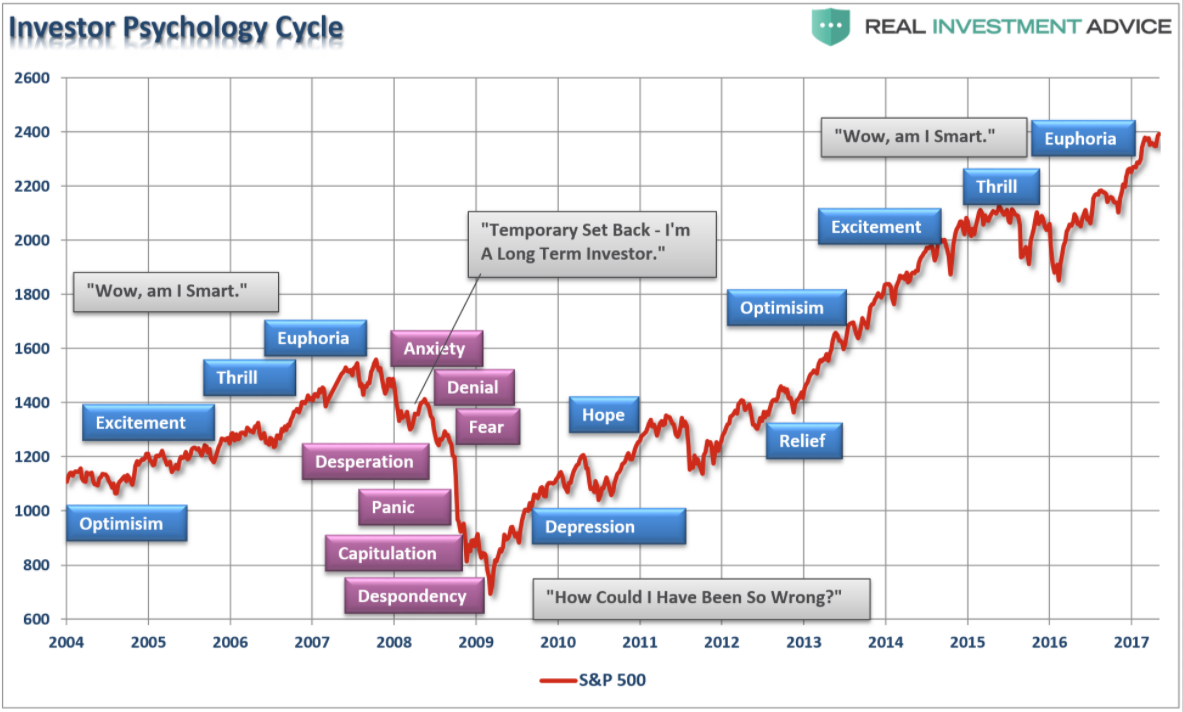

Confused by Randomness

You will agree that a good trade is one you would repeat time and again whenever certain conditions are met, regardless of how any particular trade turns out. This implies that any trade, however well planned, can end badly. In other words, investing in financial markets means facing a large dose of randomness.
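How often a good trade still ends badly can be made concrete. This is a minimal sketch under hypothetical assumptions: independent trades that each win or lose the same fixed amount, with an assumed 55% win rate, so every trade has a positive expected result. It computes the exact binomial probability of ending a run of trades with a net loss.

```python
from math import comb

def prob_net_loss(n_trades: int, p_win: float) -> float:
    """Probability of ending with more losing than winning trades.

    Assumes independent trades that each win or lose the same fixed amount.
    """
    return sum(
        comb(n_trades, k) * p_win**k * (1 - p_win) ** (n_trades - k)
        for k in range(n_trades + 1)
        if 2 * k < n_trades  # fewer wins than losses -> net loss
    )

P_WIN = 0.55  # hypothetical win rate of a "good" trade
edge = P_WIN * 1 + (1 - P_WIN) * (-1)  # expected result per trade, about +0.10

for n in (1, 10, 50, 200):
    print(f"{n:>3} trades: P(net loss) = {prob_net_loss(n, P_WIN):.3f}")
```

In this toy model a single trade loses 45% of the time and a ten-trade stretch ends in the red about one time in four, even though every trade is worth taking; only over many trades does the edge show through.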

The bad news is that System 1’s deceptions multiply in activities whose outcomes depend on probability. In fact, Homer believes he can influence chance itself.

A few years ago, a BBC reporter showed that at many Manhattan crosswalks there is no connection between pressing the “wait for green” button and the time it takes for the light to change. The New York Times corroborated it and noted that the same happened in other cities (e.g., London). Because pedestrians feel they control the situation, they are less likely to cross on red.

This trap is known as the “illusion of control”: we believe we can influence things over which we have no power. For example, when we blow on our fist or shake the dice vigorously before rolling them. Or when we credit our winning trades to our superior analysis and blame our losing ones on bad luck (which also fits another mental trap known as “attribution bias”).

Along these lines, a 2003 study by Fenton-O’Creevy et al. found that traders more prone to the illusion of control performed worse, analyzed worse, and managed risk worse.

Correlation, Causality, and Chance

This need for control leads us to look for cause-and-effect relationships to explain random phenomena. Unfortunately, Homer is no scientist when it comes to identifying patterns, and few places offer as many ridiculous patterns as the financial markets. As a result, hundreds of published books are veritable compendiums of spurious correlations.

It is important to understand that correlation does not imply causation, and that the fact that a system has worked in the past is not enough to extrapolate it into the future. Besides being useful, the system must make sense.
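A quick way to see how easily markets produce ridiculous patterns is to correlate series that, by construction, have nothing to do with each other. This sketch (an illustration, not a market model) builds pairs of independent Gaussian random walks, used here as toy price paths, and counts how often their correlation exceeds 0.5 in absolute value, compared with pairs of plain independent noise. The series length, number of trials, seed, and 0.5 threshold are arbitrary choices.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def random_walk(rng, steps):
    """Cumulative sum of independent Gaussian steps (a toy 'price' path)."""
    level, path = 0.0, []
    for _ in range(steps):
        level += rng.gauss(0, 1)
        path.append(level)
    return path

def share_strongly_correlated(rng, trials, steps, walks, threshold=0.5):
    """Fraction of independent pairs whose |correlation| exceeds threshold."""
    hits = 0
    for _ in range(trials):
        if walks:
            a, b = random_walk(rng, steps), random_walk(rng, steps)
        else:
            a = [rng.gauss(0, 1) for _ in range(steps)]
            b = [rng.gauss(0, 1) for _ in range(steps)]
        if abs(pearson(a, b)) > threshold:
            hits += 1
    return hits / trials

rng = random.Random(42)
print("random walks:", share_strongly_correlated(rng, 200, 500, walks=True))
print("white noise: ", share_strongly_correlated(rng, 200, 500, walks=False))
```

Independent noise essentially never crosses the threshold, while a sizeable share of the unrelated random walks do, because trending series drift together or apart by pure chance. A strong historical correlation between two price series is therefore weak evidence of any real link.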

Chalmers, inspired by B. Russell, explained it well with his story of the inductivist turkey. From its first morning on the farm, a turkey was fed at 9 o’clock. Being a scientific turkey, it decided not to assume that this would always happen and waited a long time to gather enough observations. It recorded cold days and hot days, rainy days and sunny days, until it finally felt confident in inferring that it would always be fed at 9 o’clock. Then Christmas Eve arrived, and the turkey itself became the meal.

In 1956, Neyman (later corroborated by Höfer, Przyrembel, and Verleger in 2004) showed that there is a significant correlation between the growth of the stork population in a given area and the birth rate in that area. Cause?
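One way such a correlation can appear without any causal link is a common third factor that drives both variables. The sketch below is purely hypothetical: it invents a “rurality” score per area and makes both stork counts and births depend on it, never on each other. It is not a claim about what explains the real stork data; the coefficients, noise levels, and seed are made up.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

rng = random.Random(1)
N = 1_000  # hypothetical number of areas
# Hypothetical confounder: how rural each area is, on a 0-1 scale.
rural = [rng.random() for _ in range(N)]
# Storks and births both depend on rurality, never on each other.
stork_noise = [rng.gauss(0, 5) for _ in range(N)]
birth_noise = [rng.gauss(0, 1) for _ in range(N)]
storks = [100 * r + e for r, e in zip(rural, stork_noise)]
births = [20 * r + e for r, e in zip(rural, birth_noise)]

r_raw = pearson(storks, births)
# Subtract the (known, simulated) effect of rurality: only the noise remains.
r_controlled = pearson(stork_noise, birth_noise)
print(f"storks vs births: {r_raw:.2f}")
print(f"after removing rurality: {r_controlled:.2f}")
```

The raw correlation comes out very high, yet once the shared driver is taken out nothing is left. In real data the confounder’s effect is not known exactly and has to be estimated, which is much harder than in this simulation.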

It Depends on the Question

Many of our decisions are influenced by how information is presented to us or how the question is asked. For example, we will be more willing to sell a share priced at EUR 50 if we bought it at EUR 40. However, if the previous day’s close was EUR 60, we will be more reluctant to do so.

Imagine you have to choose between these options:

- Lose 800 USD for sure.

- Lose nothing with 50% probability, or lose 1,600 USD with 50% probability.

Although the expected value is the same (0.5 × (-1,600) + 0.5 × 0 = -800 USD), most people choose the second option.

Let’s put it another way:

- Win 800 USD for sure.

- Win nothing with 50% probability, or win 1,600 USD with 50% probability.

Now most people choose the first option. Framing the choice as a gain rather than a loss leads the mind down a different path.
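The claim that the sure option and the gamble are equivalent in expected value, in both framings, can be checked directly. This is a small sketch; representing each option as a list of (probability, payoff) pairs is an illustrative choice, and exact fractions are used to avoid floating-point rounding.

```python
from fractions import Fraction

def expected_value(outcomes):
    """Sum of probability * payoff over (probability, payoff) pairs."""
    return sum(p * x for p, x in outcomes)

half = Fraction(1, 2)
loss_frame = {
    "sure loss": [(1, -800)],
    "gamble": [(half, 0), (half, -1600)],
}
gain_frame = {
    "sure gain": [(1, 800)],
    "gamble": [(half, 0), (half, 1600)],
}

for name, frame in (("loss frame", loss_frame), ("gain frame", gain_frame)):
    for option, outcomes in frame.items():
        print(f"{name} / {option}: {expected_value(outcomes)} USD")
```

Within each frame both options have the same expected value (-800 USD and +800 USD respectively), so expected value alone cannot explain why preferences flip between the two framings.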

Loss Aversion

The example above also illustrates “loss aversion”: a loss pains us more than a gain of the same size pleases us. This is one of the causes of the well-known “disposition effect”: the tendency to take profits too early and let losses run.
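Loss aversion is often formalized with the prospect-theory value function of Kahneman and Tversky. The sketch below uses the median parameter estimates reported by Tversky and Kahneman (1992), α = 0.88 and λ = 2.25, and deliberately omits the probability-weighting part of the theory, so it is a simplified illustration rather than a full model. The function names are illustrative.

```python
ALPHA = 0.88   # curvature estimate from Tversky & Kahneman (1992)
LAMBDA = 2.25  # loss-aversion estimate from Tversky & Kahneman (1992)

def value(x: float) -> float:
    """Prospect-theory value of a gain/loss x relative to the reference point."""
    if x >= 0:
        return x ** ALPHA
    return -LAMBDA * (-x) ** ALPHA

def prospect(outcomes):
    """Probability-weighted value (simplification: no probability weighting)."""
    return sum(p * value(x) for p, x in outcomes)

# A 100 USD loss weighs 2.25 times as much as a 100 USD gain.
print(value(100), value(-100))

# The framing example: a sure amount vs a 50/50 gamble on twice the amount.
prefers_gamble_in_losses = prospect([(0.5, 0), (0.5, -1600)]) > prospect([(1, -800)])
prefers_sure_in_gains = prospect([(1, 800)]) > prospect([(0.5, 0), (0.5, 1600)])
print(prefers_gamble_in_losses, prefers_sure_in_gains)
```

With these parameters the sketch reproduces both choices from the previous section. Note that the flip between frames comes from the curvature α < 1 (concave for gains, convex for losses), while λ is what makes a loss weigh more than an equal gain.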

We cannot avoid the disposition effect. In fact, it is rooted in our primate nature, as K. Chen and L. Santos of Yale University demonstrated by studying a group of capuchin monkeys.

These monkeys had been taught to exchange small coins for fruit. When a monkey “bought” a grape from one of the researchers, he would toss the coin: heads, he gave it two grapes; tails, only one. The other researcher, on receiving the coin, first showed two grapes and then tossed the coin: heads, he handed over both grapes; tails, he gave one and kept the other.

On average, the monkeys received the same number of grapes from both researchers, but one framed the exchange as a potential gain and the other as a potential loss. Soon, the monkeys began to trade only with the researcher who did not show the two grapes. The pain of losing a grape outweighed the satisfaction of winning one.

We can’t help it. But L. Feng and M. Seasholes showed in a 2005 study that experience attenuates it significantly.

Judged by the Outcome

Perhaps because we do not know how to decide under uncertainty, we also do not know how to evaluate the decisions made by others. In a 1988 study, J. Baron and J. Hershey asked a subject to choose between:

- Get 200 USD for sure.

- Get 300 USD with 80% probability or 0 USD with 20% probability.

A priori, the most logical choice is to take the risk, since its expected value is 240 USD (300 × 80% + 0 × 20%), higher than the sure 200 USD. But the aim was not to evaluate the wisdom of whoever chose, but how others rated the choice. So, once the result was known, different people were asked to rate the decision on a scale from -30 (worst) to +30 (best).

The rating was +7.5 when the subject took the risk and won, and -6.5 when he lost. In other words, the subject is judged not on whether he made the most logical decision, but on its result.
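The point is that the quality of the decision is fixed before the outcome is known. This small sketch recomputes the expected values from the study’s two options, using exact fractions; the option encoding and variable names are illustrative.

```python
from fractions import Fraction

def expected_value(outcomes):
    """Sum of probability * payoff over (probability, payoff) pairs."""
    return sum(p * x for p, x in outcomes)

sure_option = [(Fraction(1), 200)]
risky_option = [(Fraction(4, 5), 300), (Fraction(1, 5), 0)]

ev_sure = expected_value(sure_option)    # 200 USD
ev_risky = expected_value(risky_option)  # 240 USD

# The verdict depends only on the inputs, so it is the same whether
# the risky option later pays 300 or 0.
better_choice = "risky" if ev_risky > ev_sure else "sure"
print(ev_sure, ev_risky, better_choice)
```

Nothing in this calculation changes after the draw, which is why rating the same decision +7.5 or -6.5 depending on the result says more about the evaluators than about the decision.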

External Influences

When we make decisions, we are also influenced by what others think, by what others expect of us, and even by what others order us to do.

Asch showed how hard it is to go against the crowd. In his classic study, he showed groups of students cards with a reference line and three comparison lines of different lengths, and asked them which comparison line matched the reference. All of them were in on it except one, the subject of the study. The accomplices were instructed to give the right answer on some rounds and the wrong one on others. When they gave the right answer, the subjects rarely erred. But when the group gave the wrong answer, the subjects erred almost 40% of the time, even though the lines differed by several centimeters.

Stanford’s infamous prison experiment shows the influence of what others expect of us. A group of young people were selected and randomly divided into prisoners and prison guards. The prisoners had to wear smocks and were identified by numbers, not by name. The guards’ only rule was that they could not use physical violence.

By the second day, the experiment had gone completely out of control: the prisoners suffered, and accepted, humiliating treatment at the hands of the guards.

Even more disturbing is the study by Stanley Milgram of Yale University. This experiment involved three people: a researcher, a teacher (the subject), and a student (an actor in on the experiment). The researcher tells the teacher to ask the student questions and to punish every wrong answer with a supposedly painful electric shock. The first shock is 15 volts, and it rises with each mistake, level by level, up to 450 volts.

As the shocks rise, the student feigns grimaces and cries of pain. From 300 volts on, he stops responding and feigns seizures.

From around 75 volts, teachers would usually get nervous and ask to stop the experiment. When that happened, the researcher refused up to four times, saying in turn:

- Please continue.

- The experiment requires you to continue.

- It is absolutely essential that you continue.

- You have no choice. You must continue.

If the teacher asked to stop a fifth time, the experiment ended. Otherwise, it continued.

All subjects asked to stop at some point, but none of them insisted a fifth time before reaching 300 volts, and 65% of the participants, although uneasy, went all the way to 450 volts.

If you think you would never fall for something like this, keep in mind that both studies have been repeated at different times and with different variations, with similar results.

What Can We Do?

Now you know. Your mind deceives you and conspires against you. You can’t help it, but you can avoid falling into its traps if you understand how it deceives you. There are hundreds of resources (books, articles, etc.). Use them. And remember: to be brave, you first have to be afraid.